Conversational Agents

Conversational agents (CAs) are agents that use natural language to emulate human-to-human communication. Many industries, especially customer service, adopt CAs to improve cost efficiency and enhance user experience. As these technologies have grown more advanced and widely used, they have raised important questions about how people perceive and interact with them. Acceptance of algorithms, particularly in the form of CAs, is a critical issue. While the technical capabilities of CAs have evolved significantly with the advent of large language models (LLMs), the human factors influencing their adoption remain a central concern. The research group addresses one of these key challenges: algorithm aversion, the tendency of people to prefer human over algorithmic judgment.

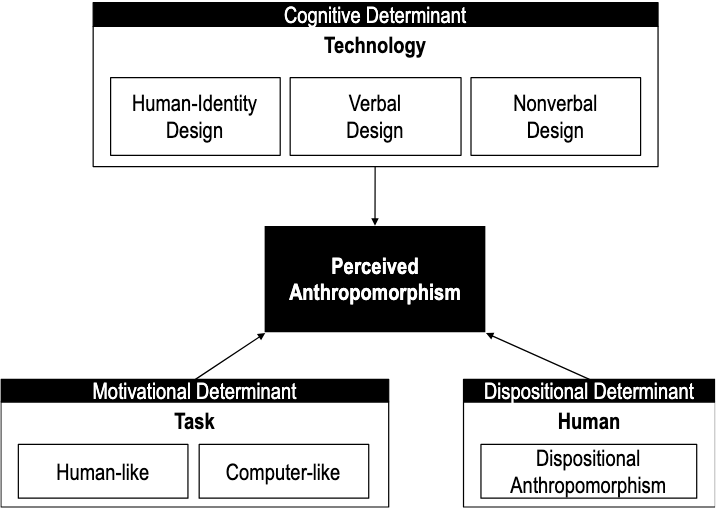

One way to reduce algorithm aversion is to anthropomorphize the CA, creating a more human-like impression that fosters trust. In our research, we explored how to design CAs to appear human-like (Seeger et al., 2018). Building on this, we developed a framework that examines how various design cues interact and demonstrates their combined impact (Seeger et al., 2021).

Studying algorithm aversion raised the question of why people's aversion to algorithms differs across contexts. One of our studies revealed that individuals desire to feel more human in pro-social situations, which heightens their algorithm aversion (Heßler et al., 2022). Combining these findings, we implemented a CA with and without voice. Both versions outperformed a filter-based version, and the results revealed that voice is not as effective as commonly believed (Heßler et al., 2023).

Our research aims to better understand the processes that lead to algorithm aversion and to find ways to mitigate it. In particular, we address these issues in highly sensitive areas, such as subjective and emotional decisions like donating money to charity.

- Seeger, A.-M., Pfeiffer, J., & Heinzl, A. (2018). Designing anthropomorphic conversational agents: Development and empirical evaluation of a design framework. 39th International Conference on Information Systems, ICIS 2018: Proceedings.

- Seeger, A.-M., Pfeiffer, J., & Heinzl, A. (2021). Texting with humanlike conversational agents: Designing for anthropomorphism. Journal of the Association for Information Systems, 22(4), 931–967. https://doi.org/10.17705/1jais.00685

- Heßler, P. O., Pfeiffer, J., & Hafenbrädl, S. (2022). When self-humanization leads to algorithm aversion. Business & Information Systems Engineering, 64(3), 275–292. https://doi.org/10.1007/s12599-022-00754-y

- Heßler, P. O., Pfeiffer, J., & Unfried, M. (2023). Conversational agent with voice: How social presence influence the user behavior in microlending decisions. ECIS 2023 Research Papers, 315. https://aisel.aisnet.org/ecis2023_rp/315/